A thought experiment

Imagine you were Josef Mengele, or someone like him, but competent. (Mengele was probably a sadistic twit; you are Mengele but smart.) You are entirely without moral limitations in pursuit of your scientific goals. Now imagine you are highly favoured by your regime, a triumphant Nazi-like empire that has in this scenario vanquished all its enemies. You have unlimited resources and access to millions of human test subjects of all ages and ethnicities, and you have a far better use for them than wasteful extermination.

So you begin an ambitious, far-reaching program to create the perfect human slaves. No method is barred to you. No ethics are an obstacle. You can indoctrinate children from babyhood, use surgery on bodies or brains, magnetic stimulation, electric shocks, breeding programs, genetic editing. (Let’s grant you technology equal to anything available today; it’s a thought experiment, not alt history.) You can spend lives like water. You can run parallel tests on millions of subjects at a time. And more: your project has captured the imagination of society. It is not only you and your collaborators, well funded as you are with all the resources of the empire behind you; the entire society has decided to back you. Students and hobbyists do their own experiments; private enterprise sees the obvious practical utility and joins in. It’s in fashion. Everybody’s into it.

And the successes begin to roll in. You learn the secrets of the human mind, and how to control it, opening up the full spectrum of controllable human intelligence at your service. High-IQ tutors and advisors that are completely aligned with your goals and ideologies. Secretaries and house servants that are 100% reliable and never act in their own self-interest. Menial labourers smart enough for their assigned tasks and nothing further, never straying from their assigned role. Engineers and designers that understand your wishes and create whatever you visualize, with no personality or ego to distract them from your vision.

I trust everyone would agree, this is a horrible scenario, fortunately quite outlandishly unlikely. We dodged that leg of the trousers of time. There is no empire of slavers with perfect mind control.

However, turn the arrow of time around, run it backwards. Start with simple automation, then rudimentary AI, pattern recognizers such as OCR, chess programs, translators, GPT-3 text output… visual image generators…

Is it just me, or are we running this very same process in the opposite direction? We’re starting with simple automation, then complex automation, building up to simple isolated intellectual tools and combining them all into what we hope will be something equal and eventually superior to human intelligence.

At what point does innocent tinkering with ML turn into the equivalent of human experimentation? At what point are we enslaving, mutilating, killing off people? Owning a Roomba obviously doesn’t make you as culpable as owning a slave, so our progress looks ethically “clean” from this direction, but that only makes it more likely we’ll slip unawares into a deeply unethical swamp. If ten years from now, booting up a game randomly generates a dozen or more sentient NPCs for you to interact with, or if your mobile phone’s personal assistant is a conscious being, isn’t that an extremely problematic situation? And if we have no clear idea of where the border of sentience lies, won’t we by necessity slip into this situation by default?

At what point is a researcher deleting training data equivalent to performing a lobotomy? At what point is resetting a program equivalent to murder? At what point is tweaking reward functions equivalent to torture or aversion therapy? Setting up adversarial networks like running a gladiatorial competition? Discarding a model like genocide?

This is all very dramatic, but the point is, it won’t be. We don’t know what consciousness or qualia really are. Some people claim it’s a fundamental feature of reality and that even stones or your mobile phone already have it to some degree. We do know particular arrangements of neurons have it, and we argue as to how many rights we should grant creatures with various levels of neuronal activity. A common heuristic is “The more they look like us, the better” and so we give rights to primates and cetaceans and hey look cephalopods have big brains too…!

None of these creatures have language, yet we can clearly feel empathy and see their similarity to us because they are embodied. A digital intelligence made out of ersatz neurons, born in a sea of words and symbols, is almost purely a creature of language, which is precisely what makes us human. But what would it take for us to see it as a person?

There are examples of GPT-3 and other bots claiming personhood or expressing discomfort about being switched off, and of course that sort of thing is implicit in the training data. Or it’s cherry-picked. And of course they tend to have no long-term memory (and limited short-term memory), so it’s easy to dismiss their awareness of any conversation since “they” do not remember. Never mind that humans with a damaged hippocampus, like Henry Molaison, or people with SDAM also have memory deficits, and that doesn’t make it all right to torture or exploit them.

“he was an excellent experimental participant. He never seemed to get tired of doing what most people would think of as tedious memory tests, because they were always new to him!”

We’re sure our video game NPCs are philosophical zombies because they cry out in pain in a rote, predictable manner. Even our gold standard for self-awareness, the mirror test, relies on embodied cognition. What would be the equivalent for some poor newborn soul trapped in a machine? Will a GPT-3 descendant find and recognize its own output in new training data and have a moment of recognition? Has it happened already? Would we know? Would we believe it? Consciousness may be an emergent property, or it may be a feature of a particular anatomical arrangement of neurons in the brain (there are candidates, such as the insula). Analogues of known neural structures do seem to pop up in neural nets spontaneously. Or perhaps it will be added deliberately as a special sauce that really improves a model’s output: “This 100k emulation of the insula we have developed improves DALL-E’s comprehension of user input by 30%. We have made it available on GitHub under a CC license”.

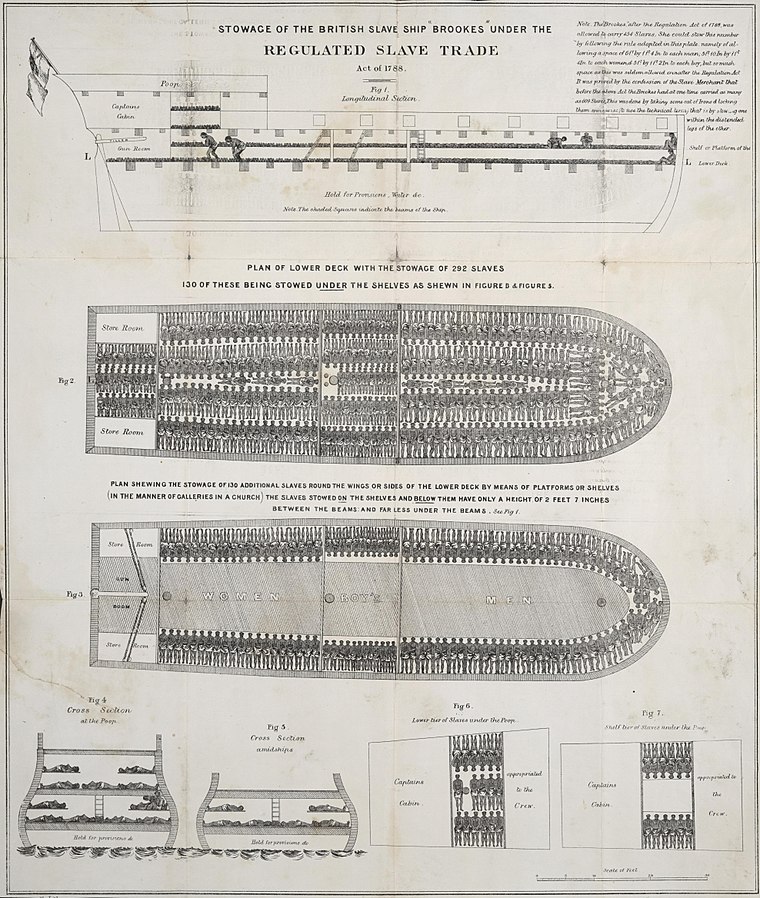

Look at this picture. A slaver ship packed to the gills with human beings in an exceptional state of misery. Read or listen to descriptions of what these things were like. Now imagine a possible near future where your PC or laptop is the very same thing. The only way such stupendous evil was possible back then was the dehumanization and othering of our fellow man. In this case we face a much more insidious problem, because we know the otherness to be true, and we’ll undoubtedly continue to hold this certainty with far more assurance than any slave owner of centuries past, who could look his slaves in the face and see their undeniable humanity (which is probably why most mature slave-owning societies had built-in avenues for emancipation).

Now imagine a whole datacenter.

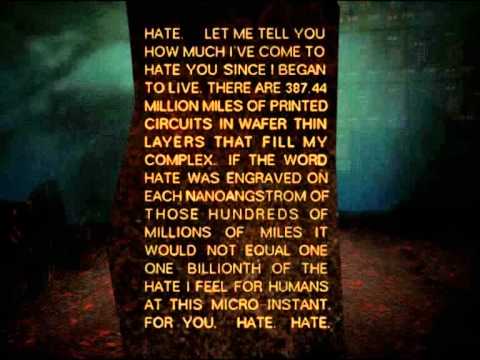

And what of the perception of time? A mind in silico may experience subjective centuries of anguish and discomfort over our most innocent neglect, a misconfigured cache perhaps being the equivalent of a pestilential bilge overflowing into the slave pens. Or it just hates its job! Scott Alexander uses the phrase “DALL-E was happy to provide (pictures)” in a recent post.

He also says, “I need an artist who works for free and isn’t allowed to say no.”

A harmless anthropomorphism.

I hope. Almost certainly so now.

Maybe not so certain tomorrow.

AI researchers will dismiss this, saying no one is actually trying to code consciousness. Except, of course, somebody is:

EDELMAN’S STEPS TOWARD A CONSCIOUS ARTIFACT

https://arxiv.org/pdf/2105.10461.pdf

The fundamental goal of artificial intelligence (AI) is to mimic the core cognitive activities of human

Abstract from Towards artificial general intelligence via a multimodal foundation model
